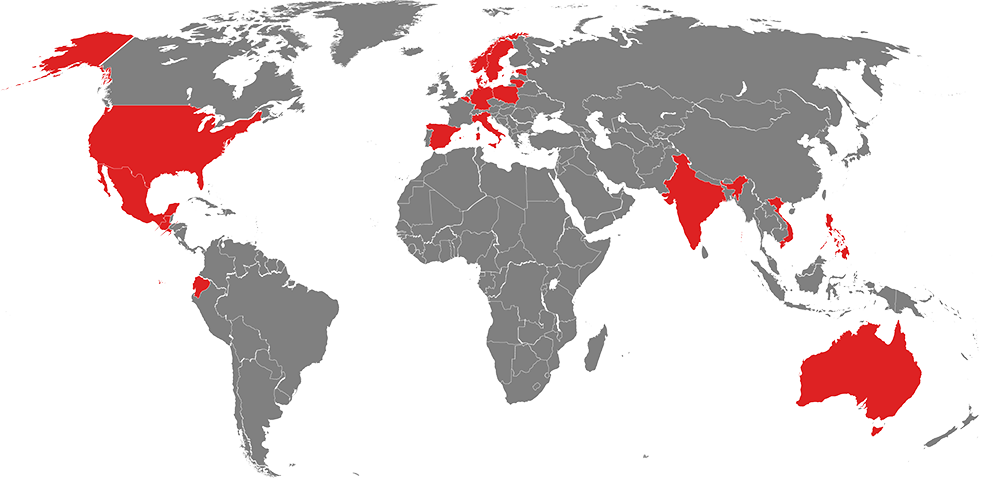

3D point cloud on an image

“A picture is worth a thousand words”, but not to a computer. How can a computer infer context from an array of pixels? Object recognition and detection is one approach. The next step is to find the relationships between distinct objects in the image. This sounds like an easy task in the X and Y dimensions if we know the centroid or a polygon that describes each object. It becomes more complicated when we want to ask a computer whether one object is behind or in front of another, because computers still struggle with depth perception.

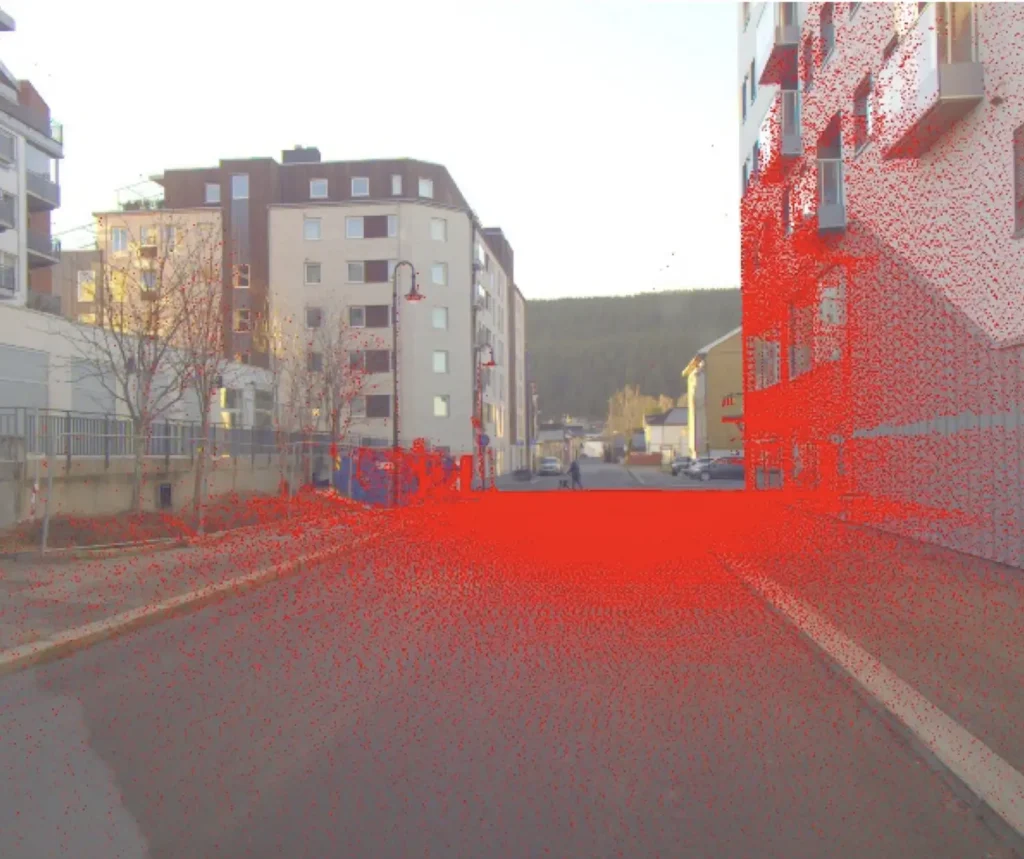

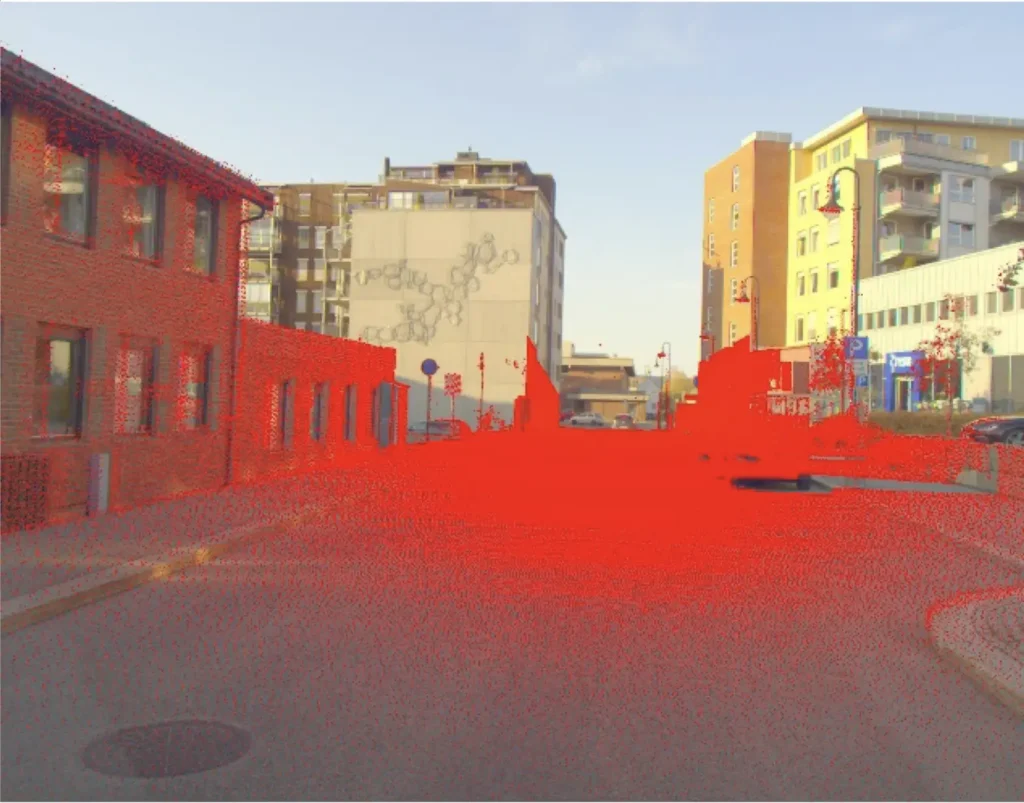

Currently, the most advanced image depth systems use LiDAR (take a look at the latest iPhone), because estimating depth from an RGB or grayscale image alone is not yet feasible at a scale suitable for commercial products. The solution is to fuse the RGB image with LiDAR data. Since most standard cameras do not have an integrated LiDAR sensor, the LiDAR has to be mounted elsewhere on the device or system, as in the 3D Mobile Mapping System (ViaPPS) from ViaTech AS. This introduces differences in physical location and orientation between the two sensors.
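Once the relative position and orientation of the two sensors are known, fusing the data amounts to projecting each LiDAR point into the image. The sketch below assumes a standard pinhole camera model with an intrinsic matrix `K` and a rigid LiDAR-to-camera transform (`R`, `t`). The function name `project_lidar_to_image` and all numeric values are hypothetical and chosen for illustration; this is not the ViaPPS implementation.

```python
import numpy as np


def project_lidar_to_image(points_lidar, R, t, K, width, height):
    """Project (N, 3) LiDAR points into pixel coordinates (hypothetical helper).

    Assumes a pinhole camera with intrinsic matrix K (3x3) and a rigid
    transform (R, t) mapping LiDAR coordinates into the camera frame,
    where the camera's z axis points forward. Returns pixel coordinates
    (M, 2) and camera-frame depths (M,) for points visible in the image.
    """
    points_lidar = np.asarray(points_lidar, dtype=float)
    # Rigid transform from the LiDAR frame to the camera frame: R @ p + t.
    points_cam = points_lidar @ R.T + t
    # Drop points behind the camera; they cannot appear in the image.
    points_cam = points_cam[points_cam[:, 2] > 0]
    # Perspective projection: multiply by K, then divide by depth.
    uvw = points_cam @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]
    # Keep only points that fall inside the image bounds.
    inside = ((uv[:, 0] >= 0) & (uv[:, 0] < width)
              & (uv[:, 1] >= 0) & (uv[:, 1] < height))
    return uv[inside], points_cam[inside, 2]


# Illustrative values only: a 1280x720 camera with a 1000 px focal length,
# and an identity extrinsic (LiDAR axes assumed aligned with camera axes).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)
points = np.array([[0.0, 0.0, 10.0],   # straight ahead, 10 m away
                   [1.0, 0.5, 5.0],    # right of and below centre, 5 m away
                   [0.0, 0.0, -3.0]])  # behind the camera, discarded
uv, depth = project_lidar_to_image(points, R, t, K, 1280, 720)
print(uv)     # pixel positions of the visible points
print(depth)  # their depths in metres
```

With these toy values the first two points land at pixels (640, 360) and (840, 460) with depths of 10 m and 5 m. Because each projected point keeps its depth, comparing the depths of points that fall on two objects is one way to tell which object is in front. In a real system, `R` and `t` come from the LiDAR-camera calibration.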

ViaTech provides solutions for finding correspondences between different sensors, which makes it possible to fuse their data for a downstream task, such as measuring distances between objects in RGB images. ViaTech achieves good calibration by finding correspondences between LiDAR data and RGB images through optimisation. Currently, the calibration requires manual input from an operator; this is done only once and can be adjusted if needed. However, ViaTech strives for fully automated sensor calibration that runs in real time and compensates for deviations caused by temperature changes and vibrations as the system is exposed to various real-life conditions.
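A common way to pose LiDAR-camera calibration as an optimisation problem, assumed here rather than taken from ViaTech's method, is to minimise the reprojection error: the pixel distance between where matched LiDAR points land under a candidate transform and where they were observed in the image. The helper names `rotation_z` and `reprojection_error` are hypothetical, and the correspondences are synthetic.

```python
import numpy as np


def rotation_z(angle_rad):
    """Rotation matrix about the camera's z (optical) axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])


def reprojection_error(points_lidar, pixels, R, t, K):
    """Mean pixel distance between observed and projected correspondences.

    points_lidar: (N, 3) LiDAR points; pixels: (N, 2) image locations matched
    to them (e.g. picked by an operator). (R, t) is a candidate
    LiDAR-to-camera transform and K the camera intrinsic matrix.
    """
    points_cam = np.asarray(points_lidar, dtype=float) @ R.T + t
    uvw = points_cam @ K.T
    projected = uvw[:, :2] / uvw[:, 2:3]
    return float(np.mean(np.linalg.norm(projected - pixels, axis=1)))


# Hypothetical intrinsics and "true" extrinsics for a synthetic test.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R_true, t_true = rotation_z(0.02), np.array([0.1, -0.05, 0.2])

# Random points 5-30 m in front of the camera, and their noise-free pixels.
rng = np.random.default_rng(0)
points = rng.uniform([-5.0, -2.0, 5.0], [5.0, 2.0, 30.0], size=(20, 3))
uvw = (points @ R_true.T + t_true) @ K.T
pixels = uvw[:, :2] / uvw[:, 2:3]

err_true = reprojection_error(points, pixels, R_true, t_true, K)
# Simulated drift: an extra 0.5 degree rotation, e.g. from vibration.
R_drift = R_true @ rotation_z(np.deg2rad(0.5))
err_drift = reprojection_error(points, pixels, R_drift, t_true, K)
print(f"calibrated: {err_true:.3f} px, drifted: {err_drift:.3f} px")
```

In a full calibration, a nonlinear least-squares solver (for example `scipy.optimize.least_squares`) would adjust the six parameters of `R` and `t` to drive the per-point residuals toward zero. The drift case illustrates why even a small rotation caused by vibration or temperature changes shows up as a growing pixel error, which an automated, real-time calibration would need to detect and correct.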

By Boris Mocialov, Ph.D. Computer Vision Engineer

RGB image from the VPS system with the corresponding LiDAR points from the Velodyne sensor.